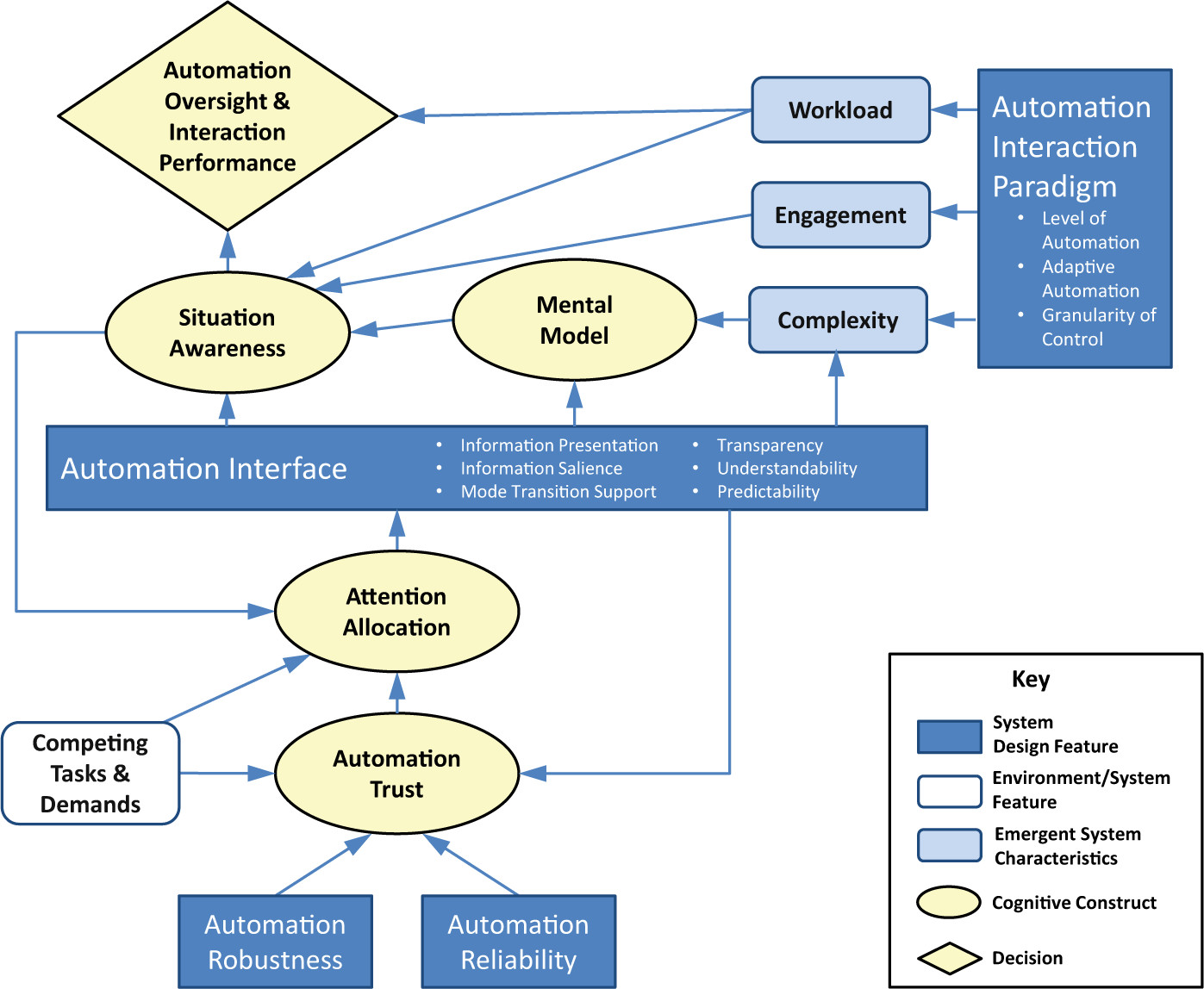

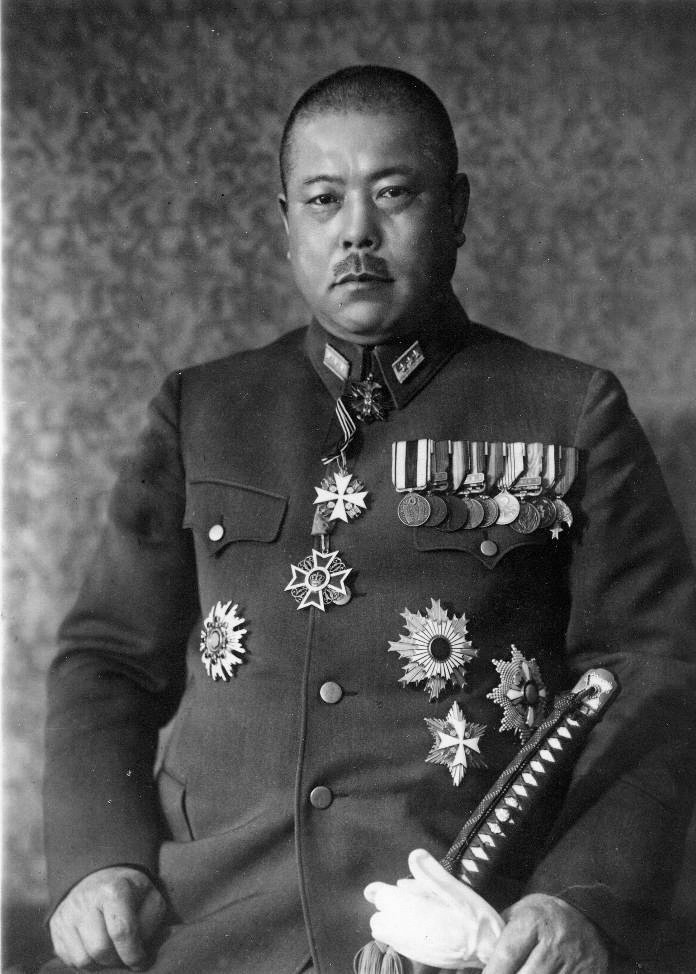

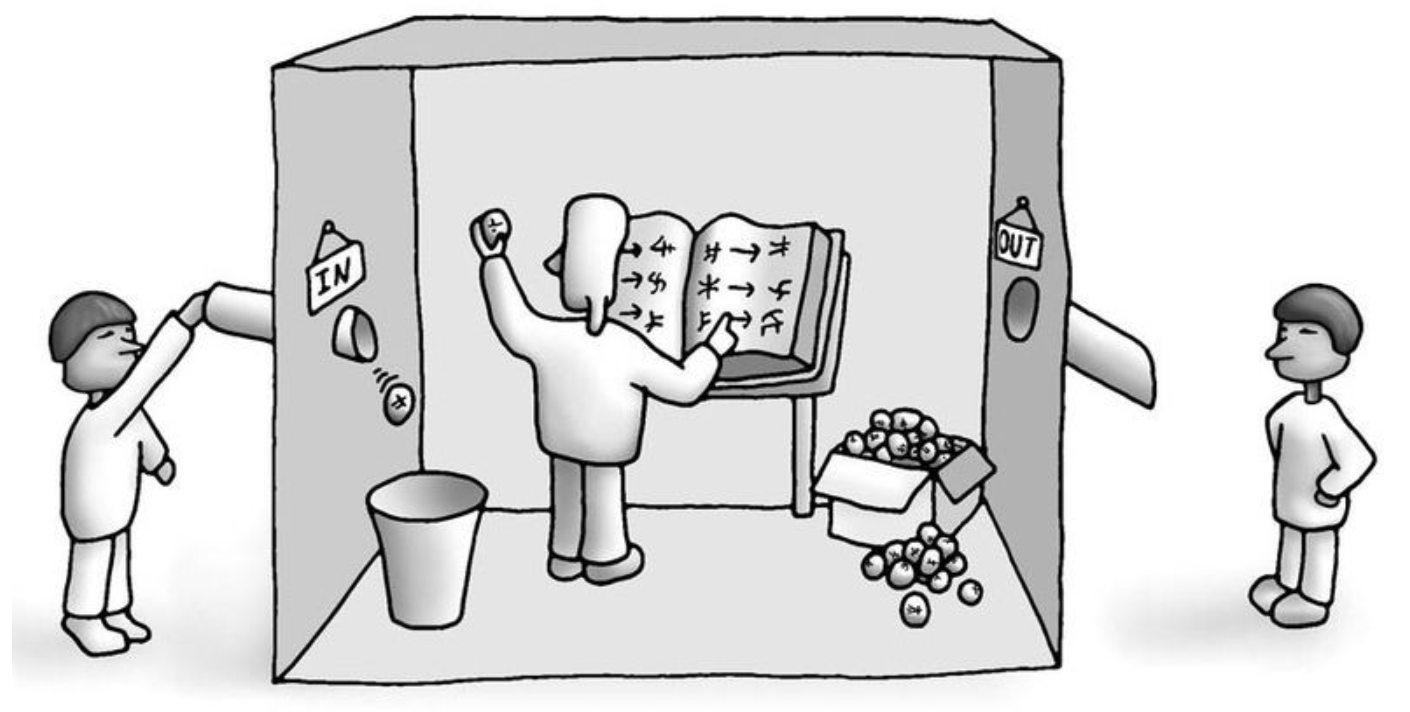

class: center, middle, inverse, title-slide

# War, Technology & Innovation
## Human Autonomy in Distributed Systems
### Jack McDonald
### 2020-01-27

---
class: inverse

# Lecture Outline

.pull-left[
Identifying an Appropriate Theoretical Frame

Technology and Human Autonomy
]

.pull-right[
]

???

Theoretical Frame: Human Autonomy in Distributed Systems

We’ll start this lecture by discussing what is meant by a theoretical framework, and how to identify an appropriate framework for tackling a given research problem. I’ll then discuss several ways in which the development of autonomous weapon systems can be approached from an academic perspective, and how each would shape subsequent research questions and research methods.

We’ll cover arguments over utility and reliability from a defence planning/strategic perspective, alongside the rise of practical ethics and military ethics as a means of analysing emerging technologies. We will also look at accidents in which automated/integrated systems result in the “wrong” target being destroyed, and at how central (or peripheral) such accidents are in distinct bodies of academic research.

Discussion question: What can the controversy surrounding the Vincennes disaster tell us about the impact of technological change on command responsibility?

Research discussion question: What are the important theoretical commitments of your research?

Reading:

- Scharre, Paul. _Army of None: Autonomous Weapons and the Future of War_. W. W. Norton & Company, 2018. Chapter 10.
- Perrow, Charles. “Normal Accident at Three Mile Island.” _Society_ 18, no. 5 (1981): 17–26.
- Pidgeon, Nick. “In Retrospect: Normal Accidents.” _Nature_ 477 (2011): 404. https://doi.org/10.1038/477404a.

---
class: inverse

# Selecting Theoretical Frameworks

???
---

# The Importance of Prior Work (& Penguins)

.left-column[
]

.right-column[
If there is no prior work on a topic, you are going to have a _really_ hard time.

Try to pick a research problem where:

- There are plenty of related pre-existing arguments, or
- It sits at the intersection of two established bodies of theory

Baggage and foundations

Disputes, blind spots, evidence, re-framing
]

???

---

# Picking a Fight: Old Theory, New Case

.pull-left[
This old theory predicts __X__, but in this case __Y__ occurs. Why is that?

What improvement to old theory might help us to explain the occurrence of __Y__?

How might we improve this aspect of old theory?
]

--

.pull-right[
This pre-existing theoretical dispute between __X__ and __Y__ predicts different outcomes, yet __Z__ has not been examined in light of this dispute.

Given that __X__ predicts __A__, why did __B__ happen in case __Z__?

What does case __Z__ tell us about the dispute between __X__ and __Y__?
]

???

---

# Picking a Fight: New Theory, Old Case

.pull-left[
Current theory examines dimensions __X__ and __Y__ of case __Z__. Why does current theory fail to explain dimension __A__?

Given the importance of __Z__ to current theory, why does current theory fail to explain dimension __A__?

Why does new theory __B__ provide a better explanation of __A__ than current theory?
]

--

.pull-right[
New theory __A__ challenges existing theory __B__ by critiquing foundational assumptions __X__, __Y__ and __Z__. Does this challenge hold true in the standard case __C__?

Given the importance of __B__, why does __A__ provide a better explanation of __C__?

What is the importance of the difference between __A__ and __B__ in case __C__?
]

???

---
class: inverse

# Small Group Discussion

.large[
What are the most important theoretical commitments of your research?
]

???

---
class: inverse

# Technology and Human Autonomy

???

Dispute

Blind Spot

---

# Hooks

.small[
Why is AEGIS not defined as a lethal autonomous weapon system (LAWS) in the meaningful human control (MHC) debate?
]

--

.pull-left[
.medium[
Dispute (OTNC)

> Given that AEGIS can enable lethal action without human supervision, why is it not classed as a LAWS?

Blind Spot (NTOC)

> Given that lethal decisions in war are produced by distributed sociotechnical systems, why does the MHC debate focus upon individual responsibility?
]
]

.pull-right[
.medium[
New Evidence (OTNC)

> Given that autonomous systems have existed for decades, why does the MHC debate assume individual human responsibility exists as the baseline?

Reframing (OTNC)

> Why, despite the widespread integration of autonomous and automated systems into militaries, are LAWS framed as an emerging technology?
]
]

???

---

# Autonomy, Ethics, and War

Given that AEGIS can enable lethal action without human supervision, why is it not classed as a LAWS?

.pull-left[
Building blocks

- Norm theory (IR/security studies)
- Moral theory
- International law
]

.pull-right[
Candidate explanations

- Objective fact?
- Shared reason for definition
- Multiple reasons for definition (strategic choice)
]

???

---
class: inverse

# Small Group Discussion

.large[
What can the controversy surrounding the Vincennes disaster tell us about the impact of technological change on command responsibility?
]

???

---

# Meaningful Human Control (1)

> Meaningful human control has three essential components:
> 1. Human operators are making informed, conscious decisions about the use of weapons.
> 2. Human operators have sufficient information to ensure the lawfulness of the action they are taking, given what they know about the target, the weapon, and the context for action.
> 3. The weapon is designed and tested, and human operators are properly trained, to ensure effective control over the use of the weapon.

Michael C. Horowitz and Paul Scharre, _Meaningful Human Control in Weapon Systems_

???
---

# Meaningful Human Control (2)

> These standards of meaningful human control would help ensure that commanders were making conscious decisions, and had enough information when making those decisions to remain legally accountable for their actions. This also allows them to use weapons in a way that ensures moral responsibility for their actions. Furthermore, appropriate design and testing of the weapon, along with proper training for human operators, helps ensure that weapons are controllable and do not pose unacceptable risk.

Michael C. Horowitz and Paul Scharre, _Meaningful Human Control in Weapon Systems_

???

---

# Theories of Human Autonomy

.pull-left[
]

.pull-right[
What information do agents need in order to use force in war?

Uncertainty and information relationships

Implications for the individualist approach to the ethics of war
]

???

---

# Normal Accidents

> Normal accidents emerge from the characteristics of the systems themselves. They cannot be prevented. They are unanticipated. It is not feasible to train, design, or build in such a way as to anticipate all eventualities in complex systems where the parts are tightly coupled.

Charles Perrow, _Normal Accident at Three Mile Island_

--

> Major accidents do not spring into life on the day of the visible failure; they have a social and cultural context and a history.

Nick Pidgeon, _In Retrospect: Normal Accidents_

???

---

# The U.S.S. Vincennes Incident

> The USS Vincennes incident and the Patriot fratricides sit as two opposite cases on the scales of automation versus human control. In the Patriot fratricides, humans trusted the automation too much. The Vincennes incident was caused by human error and more automation might have helped.

Paul Scharre, _Army of None_

???

---

# Autonomy and Technology

.pull-left[
> we might argue that autonomy-eroding technologies exert an undue influence to the point that the agent fails to make an independent judgement before deciding or acting.

Roger Brownsword, _Autonomy, delegation, and responsibility_
]

.pull-right[
]

???

---

# Autonomy in Systems

.pull-left[
> This _out-of-the-loop_ (OOTL) performance problem creates a significant challenge for autonomy. People are both slow to detect that a problem with automation exists and slow to arrive at a sufficient understanding of the problem to intervene effectively.

Mica R. Endsley, _From Here to Autonomy_
]

.pull-right[
]

???

https://journals.sagepub.com/doi/full/10.1177/0018720816681350

---

# Autonomy, Systems & Command Responsibility

.left-column[
]

.right-column[
> Yamashita's conviction was upheld for acts of troops beyond his de facto control, on the ground that operational command responsibility cannot be ceded for the purposes of the doctrine of command responsibility even though the specific aspects of such command are actually ceded to others. This rule, referred to as the "delegation principle," is recognized as a general principle of criminal law.

Ilias Bantekas, _The Contemporary Law of Superior Responsibility_
]

???

https://www.jstor.org/stable/pdf/2555261.pdf?refreqid=excelsior%3A1164a2453c8df7025bc8012f53814393

---

# An Answer to the Puzzle (1)

.pull-left[
> Given that AEGIS can enable lethal action without human supervision, why is it not classed as a LAWS?
]

.pull-right[
Explanation 1: Strategic alignment/norm entrepreneurs

- NGOs
- Academics
- Professional militaries

Academics are the odd one out: why do they not class AEGIS (and similar systems) as LAWS?
]

???

---

# An Answer to the Puzzle (2)

.pull-left[
Explanation 2: Structure of the discussion

- Model of the individual rational decision-maker is central throughout
- Relationship of humans to systems/institutions is under-theorised
- Impact of technology on human autonomy is under-explained
]

.pull-right[
]

???